Razuna Digital Asset Management — Platform Modernization

At a glance

- Performance: Reduced database round-trips by 92% on a representative asset-loading path (26 queries down to 2) by removing N+1 query patterns.

- Security: Upgraded legacy credentials from MD5 → BCrypt (cost 12) with transparent migration on login.

- Reliability: Replaced fragile file-based search indexing with a database-backed queue approach.

- Developer experience: Delivered interactive API docs via OpenAPI 3.0.3 (Swagger UI, ReDoc, Stoplight).

- Operability: Consolidated admin workflows (System Health + Logs), shipped a scheduled maintenance task system, and documented day-to-day ops in a single user + operations manual.

- Media analytics: Built-in playback, completion, and bandwidth tracking (bytes transferred) to support usage reporting and capacity planning.

- Integrations: Implemented an API2 webhook foundation for asset events to reduce polling and enable event-driven workflows.

Where it started: Razuna is an open-source Digital Asset Management platform used in regulated environments where stability and auditability are non-negotiable. The problem wasn't the product—it was the foundation. The application was built on Open BlueDragon, a discontinued CFML engine, and every attempt to modernize the infrastructure widened the gap between "it still boots" and "it's safe to operate."

When the broader Razuna ecosystem shifted toward a cloud-only direction, the on-prem option became a forced choice: freeze on aging runtimes and accept compounding risk, or rebuild the platform so it could run cleanly on modern Java and still behave like the system operations teams trusted.

Chapter 1: Getting the platform back on solid ground

The first milestone sounded simple: make it run on a modern stack. In practice, it meant unwinding years of assumptions that were never tested against today's JVM. I led a full migration from Open BlueDragon to Lucee 7, first stabilizing on Java 21 and Tomcat 11, then upgrading forward to Java 25 once the platform was deterministic and testable.

Java 25's stricter bytecode verification then started surfacing CFML patterns that had quietly accumulated over time. Fixing them wasn't about "making the errors go away"—it was about restoring determinism. I replaced dynamic code evaluation patterns like evaluate() with safe struct lookups, removed brittle behaviors that triggered verifier failures, and tightened older integrations so they behaved predictably under modern JVM security rules.
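The move from dynamic evaluation to struct lookups is easier to see in a small example. What follows is a minimal Python sketch of the pattern, not the Razuna CFML; every name in it (thumb_width, DIMENSIONS, both functions) is hypothetical.

```python
# Hypothetical values for illustration; none of these names come from Razuna.
thumb_width = 400
thumb_height = 300

def dimension_dynamic(axis):
    # Legacy-style pattern: build a variable name as a string and evaluate it.
    # Any string that reaches this call is executed as code.
    return eval("thumb_" + axis)

# Replacement pattern: the allowed keys live in one explicit map.
DIMENSIONS = {"width": 400, "height": 300}

def dimension_lookup(axis):
    # A plain key lookup. Unknown keys raise KeyError instead of
    # running an arbitrary expression.
    return DIMENSIONS[axis]
```

The lookup version fails loudly on an unexpected key, which is the deterministic behavior the migration was after.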

Constraints we codified (Lucee 7 compatibility)

- Template hygiene: Avoid invalid closing tags (e.g., `</cfelse>`, `</cfelseif>`, `</cfset>`, `</cfinclude>`) and standardize CFML comments (`<!--- ... --->`).

- Includes vs URLs: Keep `<cfinclude template="...">` paths app-root relative; only use the context path for user-facing links/forms.

- Output boundaries: Close `<cfoutput>` before logic blocks to prevent confusing parse errors (the classic “unterminated `#`” symptom).

- Control flow: Enforce rules like `cfbreak` only inside loops; keep error handling explicit (`cftry`/`cfcatch`).

- Query safety: Default to `cfqueryparam` in touched code to avoid both injection risk and legacy string-concatenation edge cases.
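The query-safety rule comes down to sending values as bound parameters rather than as SQL text. A minimal Python sketch of the same idea, using an in-memory SQLite database as a stand-in (the assets table and find_asset helper are hypothetical, and the real code uses CFML's cfqueryparam):

```python
import sqlite3

# In-memory stand-in database; the table and rows are illustrative only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE assets (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO assets (name) VALUES (?)",
    [("logo.png",), ("o'brien.mov",)],
)

def find_asset(conn, name):
    # The value travels as a bound parameter, never as part of the SQL string,
    # so quotes in the input cannot change the statement.
    return conn.execute(
        "SELECT id, name FROM assets WHERE name = ?", (name,)
    ).fetchone()
```

A name containing an apostrophe is matched literally, and an injection-style string simply matches no row.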

What the migration actually looked like (small samples)

- Conflicting auth gates: In a couple of API paths, a newer auth check succeeded and a legacy helper immediately rejected the same request. The fix was choosing one authority and removing the redundant blocker.

- Schema qualification: Standardized schema-qualified table references in touched queries to eliminate environment-dependent “invalid object name” failures.

- Naming/prefix assumptions: A few workflows relied on implicit naming conventions; making those rules explicit removed a class of hard-to-reproduce runtime errors.

Chapter 2: Turning security into a feature, not a patch

Once the platform was stable enough to trust, the next question was unavoidable: what would an audit find? The security review wasn't academic—this is the kind of system that ends up adjacent to sensitive data, and a small weakness can become a big incident.

Part of the work was proving what was already good: the data layer was overwhelmingly parameterized (thousands of parameterized queries), and the remaining high-risk paths were brought into line so fixes weren’t isolated patches—they were repeatable patterns.

The most immediate red flag was credential storage. Legacy accounts used MD5 hashing—a broken primitive by modern standards. Rather than forcing a disruptive cutover, I implemented BCrypt (cost factor 12) with a transparent migration path: when a user successfully logs in, their legacy hash upgrades automatically. Security improves from day one, without a mass reset and without breaking existing users.
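The upgrade-on-login flow can be sketched in a few lines. This is a simplified Python illustration under stated assumptions: Python's standard library has no BCrypt, so PBKDF2 stands in for it here, and the user record, hash prefix, and function names are hypothetical rather than the Razuna implementation.

```python
import hashlib
import hmac
import os

def md5_hex(password):
    # Legacy format: unsalted MD5 hex digest.
    return hashlib.md5(password.encode()).hexdigest()

def strong_hash(password, salt=None):
    # Stand-in for BCrypt (assumption): salted PBKDF2 from the stdlib.
    salt = salt or os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)
    return "pbkdf2$" + salt.hex() + "$" + digest.hex()

def strong_verify(password, stored):
    # Re-derive with the stored salt and compare in constant time.
    _, salt_hex, _ = stored.split("$")
    candidate = strong_hash(password, bytes.fromhex(salt_hex))
    return hmac.compare_digest(candidate, stored)

def login(user, password):
    stored = user["password_hash"]
    if stored.startswith("pbkdf2$"):
        return strong_verify(password, stored)
    # Legacy account: verify against MD5, then upgrade the stored hash
    # while the plaintext is still in hand.
    if hmac.compare_digest(md5_hex(password), stored):
        user["password_hash"] = strong_hash(password)
        return True
    return False
```

The key property is that the upgrade only happens after a successful legacy check, so no account is rewritten on a failed attempt.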

From there, the work became about turning "known web-app failure modes" into solved problems: brute-force protection that locks an account for 15 minutes after 5 failed attempts, separate rate-limiting lanes for login, API calls, and password resets, and closing account-enumeration leaks where an attacker could infer whether an email exists from error text or timing differences.
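The lockout rule (5 failures, 15 minutes) can be sketched as a small state machine. This Python version is illustrative only: the LoginGuard class and its in-memory storage are assumptions, and a real deployment would persist this state.

```python
MAX_FAILURES = 5
LOCKOUT_SECONDS = 15 * 60

class LoginGuard:
    """Tracks failed logins per username (in memory, for illustration)."""

    def __init__(self):
        self._state = {}  # username -> (failure_count, locked_until)

    def is_locked(self, username, now):
        _, locked_until = self._state.get(username, (0, 0.0))
        return now < locked_until

    def record_failure(self, username, now):
        failures, locked_until = self._state.get(username, (0, 0.0))
        failures += 1
        if failures >= MAX_FAILURES:
            # Start the lockout window and reset the counter.
            self._state[username] = (0, now + LOCKOUT_SECONDS)
        else:
            self._state[username] = (failures, locked_until)

    def record_success(self, username):
        # A successful login clears any accumulated failures.
        self._state.pop(username, None)
```

Passing the clock in as `now` keeps the logic easy to test without waiting for real time to pass.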

Chapter 3: Performance detective work in the database layer

With the platform modernized and safer, the next pain point was operational: under real usage, it felt slower than it should. Profiling and query analysis led to a familiar culprit—widespread N+1 query behavior where the ORM was quietly generating hundreds of round-trips that should have been a handful.

I treated it like detective work: identify the hot paths, reproduce the user flows, and then trace the database chatter back to the call sites. Across five major subsystems (DAM asset loading, custom fields, labels, collections, and folder operations), I refactored the data layer around batch queries and in-memory lookup maps. A concrete example: loading 25 assets with custom-field enrichment dropped from 26 queries (1 + 25) to 2 total—removing the latency spikes and cutting database round-trips by 92%.
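The 26-to-2 example can be reproduced in miniature. The sketch below uses an in-memory SQLite database and a hypothetical two-table schema (not Razuna's) to contrast the per-asset pattern with a batch fetch plus an in-memory lookup map; 24 of 26 queries removed is the roughly 92% reduction.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE assets (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE custom_fields (asset_id INTEGER, field TEXT, value TEXT);
""")
conn.executemany("INSERT INTO assets VALUES (?, ?)",
                 [(i, f"asset-{i}") for i in range(1, 26)])
conn.executemany("INSERT INTO custom_fields VALUES (?, 'owner', ?)",
                 [(i, f"user-{i}") for i in range(1, 26)])

counter = {"queries": 0}

def run(sql, params=()):
    # Every database round-trip goes through here so it can be counted.
    counter["queries"] += 1
    return conn.execute(sql, params).fetchall()

def load_n_plus_one():
    # One query for the assets, then one more per asset.
    assets = run("SELECT id, name FROM assets ORDER BY id")
    return [(name, run("SELECT field, value FROM custom_fields "
                       "WHERE asset_id = ?", (aid,)))
            for aid, name in assets]

def load_batched():
    # One query for the assets, one for all their fields.
    assets = run("SELECT id, name FROM assets ORDER BY id")
    ids = [aid for aid, _ in assets]
    if not ids:
        return []
    placeholders = ",".join("?" * len(ids))
    rows = run("SELECT asset_id, field, value FROM custom_fields "
               f"WHERE asset_id IN ({placeholders})", ids)
    fields_by_asset = {}  # in-memory lookup map keyed by asset id
    for aid, field, value in rows:
        fields_by_asset.setdefault(aid, []).append((field, value))
    return [(name, fields_by_asset.get(aid, [])) for aid, name in assets]
```

Both functions return the same data; only the number of round-trips differs.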

Then I made the database work with the application instead of against it. Using SQL Server Query Store telemetry, I built indexes around real production query patterns: covering indexes like IX_AssetEvents_VideoStats and IX_AssetEvents_AssetLookup to eliminate key lookups, plus a filtered index on completion events that reduced index size by ~80% while keeping the hot reads fast. Query Store validation showed consistent sub-5ms execution times across core user flows.

Some wins were algorithmic rather than purely SQL. Folder operations were effectively O(n²)—nested loops that triggered a query per asset. I rewired those workflows to O(n) by issuing a single batch fetch with a WHERE IN clause and resolving values from a struct lookup. The result was immediate: folder browsing that took 2–3 seconds dropped under 200ms, even with hundreds of assets.

Chapter 4: Search reliability without fragile file indexes

Search was another "it works until it doesn't" subsystem. The legacy approach depended on file-based Lucene indexes that required manual maintenance and could drift or corrupt under operational stress. I migrated search to a database-backed queue architecture (using razuna.search_reindex) so indexing became transactional, recoverable, and self-healing—without regressing search performance.
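A database-backed queue of this kind can be sketched briefly. Only the table name search_reindex comes from the text above; the columns, the retry budget, and the function names are assumptions, and SQLite stands in for the production database.

```python
import sqlite3

MAX_ATTEMPTS = 3  # assumed retry budget, not a documented Razuna setting

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE search_reindex (
        id INTEGER PRIMARY KEY AUTOINCREMENT,
        asset_id INTEGER NOT NULL,
        status TEXT NOT NULL DEFAULT 'pending',
        attempts INTEGER NOT NULL DEFAULT 0
    )
""")

def enqueue(asset_id):
    # The queue row is written in a transaction, so a crash cannot leave
    # a half-recorded request behind.
    with conn:
        conn.execute("INSERT INTO search_reindex (asset_id) VALUES (?)",
                     (asset_id,))

def process_next(index_fn):
    """Index the oldest pending asset. Returns False when the queue is empty."""
    row = conn.execute(
        "SELECT id, asset_id, attempts FROM search_reindex "
        "WHERE status = 'pending' ORDER BY id LIMIT 1"
    ).fetchone()
    if row is None:
        return False
    job_id, asset_id, attempts = row
    try:
        index_fn(asset_id)
        status = "done"
    except Exception:
        # Leave the job queued for a retry until the budget is spent.
        status = "failed" if attempts + 1 >= MAX_ATTEMPTS else "pending"
    with conn:
        conn.execute(
            "UPDATE search_reindex SET status = ?, attempts = ? WHERE id = ?",
            (status, attempts + 1, job_id),
        )
    return True
```

Because pending work lives in a table rather than in index files, a restarted worker simply picks up where the last one stopped.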

Chapter 5: Making the API usable for real teams

Modernizing the internals only helps if teams can integrate with it confidently. The API existed, but the developer experience was essentially "read the source." I built an interactive documentation system around an OpenAPI 3.0.3 specification, including an automated export pipeline that transforms CFML API definitions into standards-compliant OpenAPI JSON.
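The export step is, at its core, a transformation from endpoint metadata to an OpenAPI document. A minimal Python sketch follows; the input format and the build_openapi helper are hypothetical, and the real pipeline reads CFML API definitions instead.

```python
import json

# Hypothetical endpoint metadata; the real source is CFML API definitions.
endpoints = [
    {
        "path": "/assets/{id}",
        "method": "get",
        "summary": "Fetch one asset",
        "params": [{"name": "id", "in": "path", "type": "string"}],
    },
]

def build_openapi(title, version, endpoints):
    paths = {}
    for ep in endpoints:
        operation = {
            "summary": ep["summary"],
            "parameters": [
                {
                    "name": p["name"],
                    "in": p["in"],
                    # OpenAPI requires path parameters to be marked required.
                    "required": p["in"] == "path",
                    "schema": {"type": p["type"]},
                }
                for p in ep.get("params", [])
            ],
            "responses": {"200": {"description": "Successful response"}},
        }
        paths.setdefault(ep["path"], {})[ep["method"]] = operation
    return {
        "openapi": "3.0.3",
        "info": {"title": title, "version": version},
        "paths": paths,
    }

spec_json = json.dumps(build_openapi("Example API", "1.0.0", endpoints), indent=2)
```

The resulting JSON is what viewers such as Swagger UI or ReDoc load.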

To make it genuinely usable, I shipped three documentation viewers for different audiences: Stoplight Elements (modern browsing), ReDoc (clean three-panel layout), and Swagger UI (interactive testing). Integrations no longer require reading 4,000+ lines of CFML to understand request/response shapes. Teams can test endpoints in-browser and generate client SDKs from the specification with standard OpenAPI tooling.

To keep documentation from drifting, I packaged a repeatable workflow: a scriptable OpenAPI generation step, a lightweight “docs health” page to confirm that the JSON and the viewers are live, and a set of copy/paste code examples so teams can get a first request working quickly.

Chapter 5.5: Webhooks for event-driven integrations

Beyond documentation, I added an API2 webhook foundation that supports subscriptions and scheduler-friendly delivery. Core asset events (create/update/delete) can enqueue deliveries, and a background worker can fan out events to external systems—reducing brittle polling and enabling real automation when teams are ready.
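The enqueue-then-deliver split can be sketched as follows. This is an illustrative Python outline, not the API2 code: the subscription shape, event names, retry limit, and the injected send function are all assumptions.

```python
import json

# Hypothetical subscription and queue storage (in memory for illustration).
subscriptions = [
    {"id": 1, "event": "asset.create", "url": "https://example.invalid/hook"},
]
delivery_queue = []

def enqueue_event(event, payload):
    # Called from the asset code path: cheap, no network I/O.
    for sub in subscriptions:
        if sub["event"] == event:
            delivery_queue.append({
                "url": sub["url"],
                "body": json.dumps({"event": event, "data": payload}),
                "attempts": 0,
            })

def deliver_pending(send, max_attempts=3):
    # Called by a background worker or scheduler. `send(url, body)` is
    # injected; in production it would perform an HTTP POST.
    remaining = []
    for job in delivery_queue:
        job["attempts"] += 1
        try:
            ok = send(job["url"], job["body"])
        except Exception:
            ok = False
        if not ok and job["attempts"] < max_attempts:
            remaining.append(job)  # keep for the next worker run
    delivery_queue[:] = remaining
```

Keeping delivery out of the request path means a slow or failing subscriber never delays the asset operation that triggered the event.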

Chapter 6: Production hardening and operability

The last mile is where projects usually fail: production. I hardened the runtime as if it were a product, not a dev server—production-grade Tomcat 11 configuration, SSL/TLS posture, security constraints, and operational monitoring. Session management was tightened with 2-hour timeouts, secure cookie flags (httpOnly/secure/sameSite), and session rotation on authentication to mitigate fixation.

That hardening included the "boring" work that keeps teams out of trouble: tightening the Tomcat footprint (removing unnecessary admin surfaces), enforcing modern TLS, enabling HTTP/2, adding stuck-thread detection, and shipping a simple health endpoint that monitoring can check without scraping the full app. On the CFML side, I standardized structured, module-based logging with predictable rotation/retention so production incidents have an audit trail instead of a scavenger hunt.

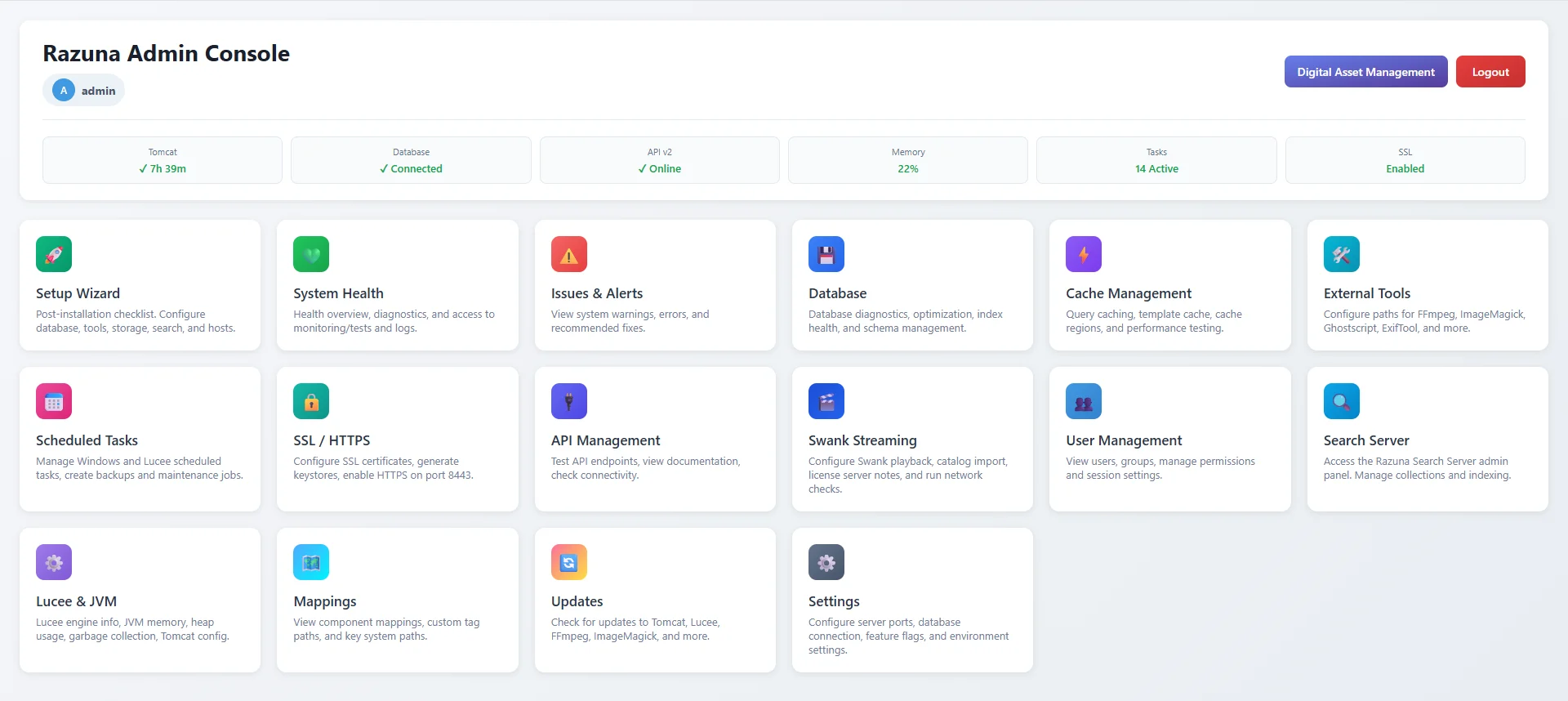

Because this is commonly deployed as a Windows Service, I also documented the operational realities (service-managed JVM settings rather than a startup script) and built admin-side tooling for day-to-day ops—health checks, a safer log viewer (restricted roots, file-count caps), and settings that control log retention and maintenance behavior.
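The two log-viewer safeguards, restricted roots and file-count caps, can be illustrated with a short sketch. This Python version is an assumption about how such checks are commonly written; the function names and the cap value are hypothetical.

```python
from pathlib import Path

MAX_FILES = 200  # assumed cap, not a documented Razuna setting

def resolve_log_path(root, requested):
    # Resolve both paths first so ".." segments and symlinks cannot be used
    # to step outside the allowed root.
    root = Path(root).resolve()
    candidate = (root / requested).resolve()
    if candidate != root and root not in candidate.parents:
        raise PermissionError(f"{requested!r} is outside the log root")
    return candidate

def list_logs(root, limit=MAX_FILES):
    # Cap the listing so a directory with thousands of files cannot
    # stall the admin page.
    files = sorted(p for p in Path(root).resolve().glob("*.log") if p.is_file())
    return files[:limit]
```

Any requested path that resolves outside the root is rejected before a file is opened.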

To keep the system running optimally, I built a first-class scheduled-task model that supports both application-level jobs (Lucee scheduled tasks) and host-level automation (Windows Task Scheduler). This covers the routine work that keeps production stable—asset and temp cleanup, cache maintenance, search reindexing, database stats refresh, and periodic database maintenance—while making it visible, controllable, and auditable from an admin UI.

On the usage side, I added built-in media telemetry so teams can answer practical questions without guesswork: what’s being played, how often, and what it costs in bandwidth. The resulting dashboards summarize playback and completion trends, bandwidth consumption over a selected window, and recent activity—useful for both capacity planning and incident triage.

One subtle challenge: many “direct asset” video URLs are served as static files by the container and never touch CFML, which means you can’t reliably track streaming in application code. To close that gap, I added a lightweight servlet filter that intercepts video asset requests and records play events and bytes transferred without ever blocking the stream: if the database write fails, the error is swallowed and playback continues. A small cleanup job resets stale “in progress” flags so dashboards stay accurate.
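The filter's core behavior is to count bytes as they pass through and never let tracking errors reach the viewer. The production filter is a Java servlet filter; the Python generator below is only an analogy for that behavior, and track_stream and record are hypothetical names.

```python
def track_stream(chunks, record, asset_id):
    """Yield chunks unchanged while counting bytes; report once at the end."""
    sent = 0
    try:
        for chunk in chunks:
            sent += len(chunk)
            yield chunk
    finally:
        # Runs on normal completion and when the consumer stops early
        # (for example, a client disconnect), so partial plays are counted.
        try:
            record(asset_id, sent)
        except Exception:
            pass  # tracking must never break playback
```

The stream content is untouched, and a failing `record` call is invisible to the viewer.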

On the media side, I built an FFmpeg-based caption pipeline supporting both embedded subtitle tracks and burned-in captions, with Intel Quick Sync Video acceleration for encoding performance where available. At the OS boundary, I removed unsafe patterns like world-writable temp script generation and brittle command construction—reducing both security risk and operational surprises.

Impact

This modernization turned Razuna from "legacy software we keep alive" into a secure, performant platform that runs cleanly on modern infrastructure. The security work closed critical vulnerabilities that could have exposed sensitive data in regulated deployments. The performance work reduced database load and stabilized response times under real usage. And the documentation work made the API approachable for engineers who need to integrate quickly and confidently.